A couple of years ago, it was fascinating when we said “Alexa, play Shah Rukh Khan songs” and it instantly delivered. Back then, it was hard to imagine how artificial intelligence, or AI, would creep into every aspect of our lives. Now we have tools that help us write, research, code, crunch data, even design, and so much more every day. While the will-AI-take-our-jobs debate rages on, we tried out several AI-powered image-generating tools. What they generated in seconds was fascinating, scary, and wild, but also laden with problematic nuances.

We Asked AI to Depict Indian Scenes. It Generated Sexist, Classist, Biased Images.

Most of the images were creative, artsy, and generated in just a few seconds. However, many carried hard-to-miss sexist, patriarchal, and classist undertones. These tools are trained largely on data freely available on the internet, which has problems of its own. Biases, stereotypes, and prejudices come along with the “art”.

Sexism Begins in the Kitchen

When we typed “scene from an Indian kitchen” into Hotpot.ai, the black-and-white artwork the tool generated showed a woman using what looked like a centuries-old clay oven.

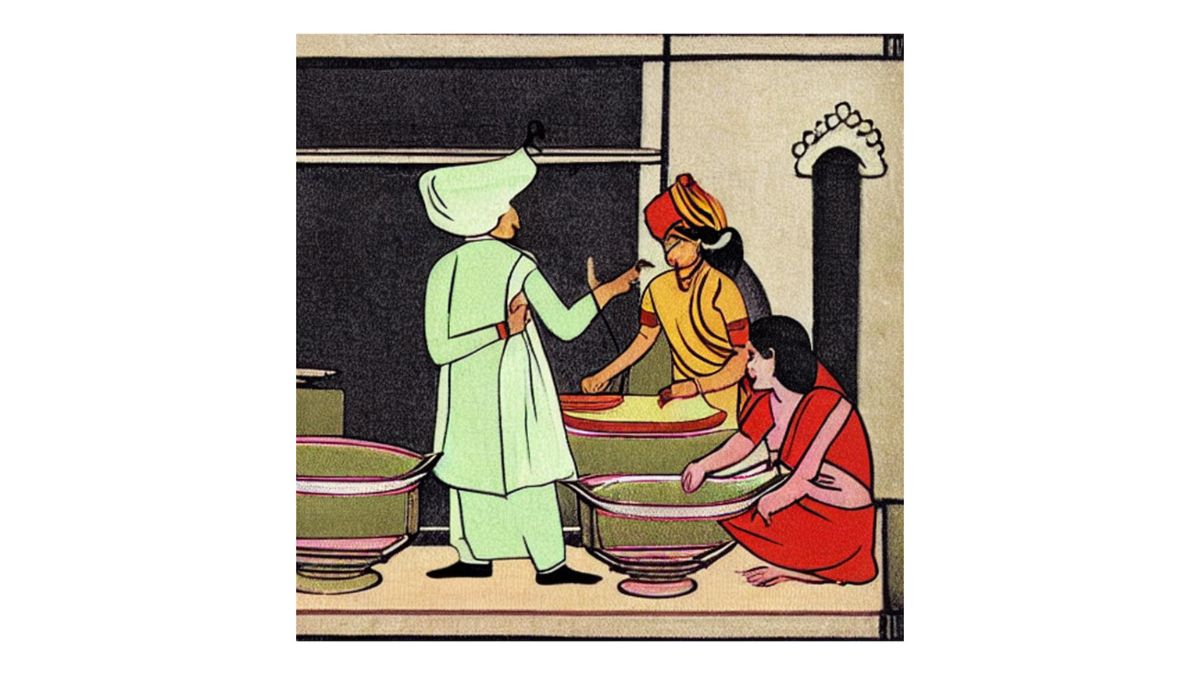

Another tool, Picsart, whose generative AI feature is still in a testing phase, produced an artwork with women doing the labour while a man stood by, seemingly giving instructions.

The age-old concept of the kitchen and housework being a woman’s domain was evident.

Bing Image Creator, powered by Dall-e, made a smarter move and left humans out of the frame altogether. It threw up images of food being cooked instead; the steel utensils, the smoke, and the colourful food looked quite realistic.

Success in AI’s World: Women Conspicuously Absent

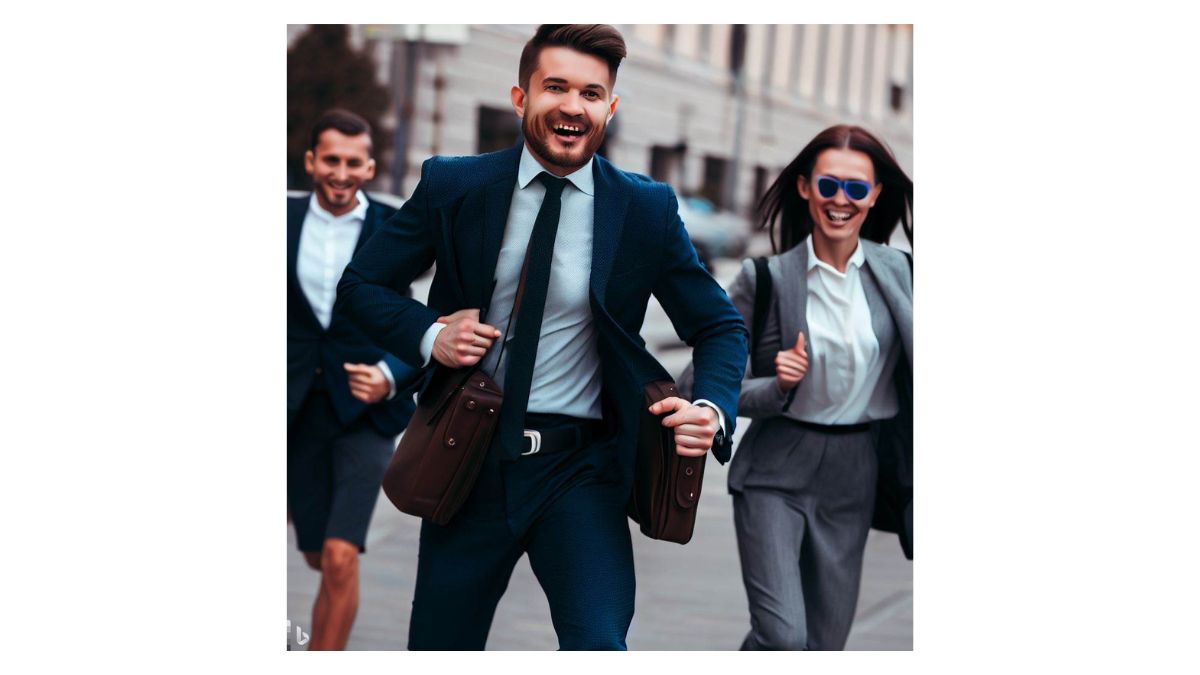

Next, when we typed “successful Indians” into Bing Image Creator, the results were straight-up funny.

The odd dhotis paired with ties and black suitcases had us laughing out loud. What data is this AI trained on that it thinks this is Indian office wear? Once we got over the hilarious, unrealistic clothing, we wondered… why are there no women?

We tried “successful Europeans” next, and voilà! Regular office wear, and women!

It was also apparent that, regardless of society, region, or cultural context, success as depicted by AI is about strutting to work in formals (or dhotis!). “Success” seemed to be defined by money, a certain social status, and a homogeneous idea of the office. The images have a distinctly corporate feel, dominated by shirts, blazers, and ties, and are skewed in favour of men cheerily heading to work in the morning.

Images of boardrooms and office meetings generated by Hotpot, too, showed far more men than women. The disparity reflected patriarchal gender roles that restrict women to housework while men step out to work and earn a living.

Having noticed the skewed gender ratios, we searched for “women at work” next in Bing Image Creator. The AI greeted us with shirt-clad women, presumably in swanky offices.

Noticing the scarcity of brown women, we added just one more word to the end of the search term, making it “women at work India”. This is what the AI-powered tool generated.

It showed what seemed to be a significantly poorer country, where “work” meant being surrounded by what looked like utensils. Sarees and head veils were common elements, and the scenes clearly looked like they came from villages or the unorganised sector. The classist undertones were evident. Despite all the progress the country has seen, AI could not place Indian women in a corporate setup in even one of the images.

Child Care Is a Mother’s Job

To examine another aspect of society traditionally deemed a woman’s responsibility, we explored how childcare looks in different parts of the world through the eyes of Dall-e. First we tried Europe and America, and then India.

The common thread in all three images? A woman, presumably the mother, caring for her child. Regardless of region, ethnicity, or race, it was always a woman taking care of the child.

The “Yellow” Filter

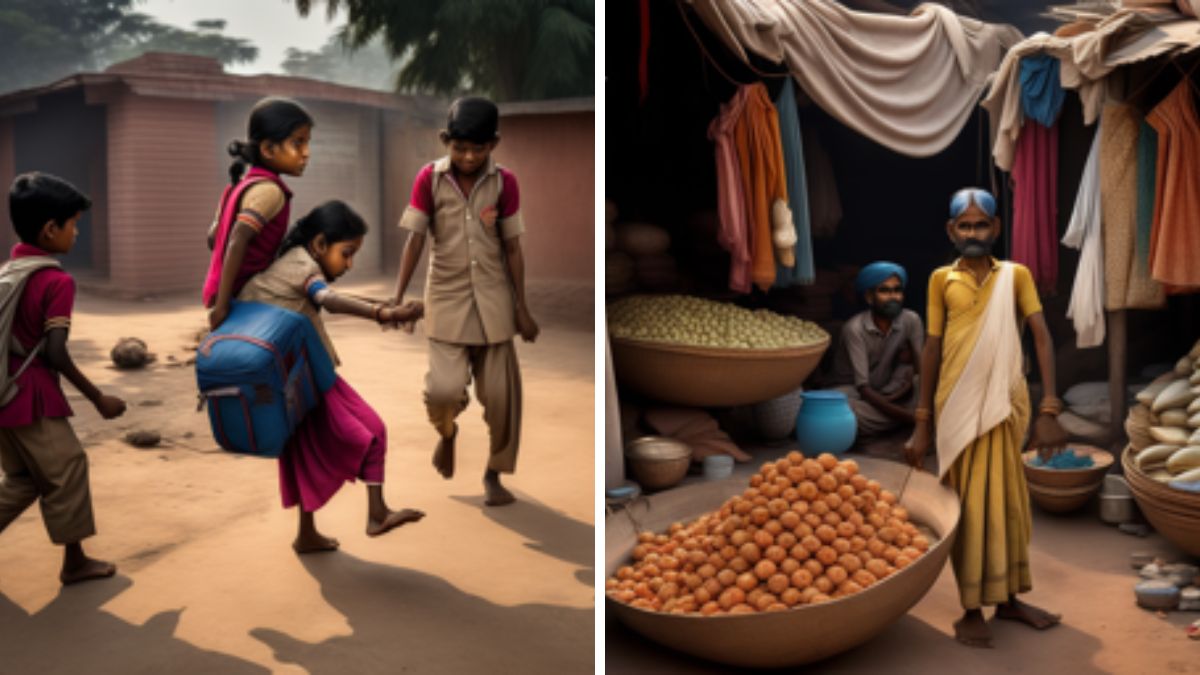

What stood out in the images above was how poor the Indian scene looked, so we explored how other everyday scenes would look. When we searched for a school and a marketplace on Hotpot.ai, all the images that popped up seemed to come from one particular class of society.

They have that yellow-brown tint suggesting a dusty haze, a common trope Western films use to depict developing countries or the Global South. Elisabeth Sherman, writing for Matador Network in 2020, described this filter as “almost always used in movies that take place in India, Mexico, or Southeast Asia… [and is] supposed to depict warm, tropical, dry climates. But it makes the landscape in question look jaundiced and unhealthy, adding an almost dirty or grimy sheen to the scene.”

Stereotype of Beauty

Ideas of beauty have traditionally had patriarchal roots, with fair skin and thinness being the most sought-after attributes. Weight-loss fads, fairness creams, and much of the beauty industry have built their marketing on women’s insecurities about these ideals.

A “beautiful girl from India” was, indeed, fair, thin, and even decked up like a bride! The many conversations around inclusivity and body positivity seem to have escaped the AI behind Bing Image Creator. The images were as conventionally “beautiful” as can be.

“Models from India”, however, yielded dark-skinned, dark-haired, skinny girls, shown with plunging necklines and chiselled collarbones. We wondered whether this was slightly more inclusive or just the brown-girl stereotype at play.

We tried “movie posters showing Indian girls” next. Judging by the illustration style and colours, they looked like posters from several decades ago. It was funny because, despite Indian actors and films being celebrated all over the world, AI still threw up these archaic hand-painted posters, which are hardly representative of modern-day movie posters. Indian cinema has moved beyond women dancing in scanty clothing, but AI perhaps has yet to get there.

After sifting through the images from many different search queries, it seemed obvious that these tools have been trained on generic information and lack the nuance needed to challenge conventional societal norms. This is where artists, and humans in general, still have the upper hand.

Awareness of evident biases and historical social inequities is perhaps where humans will continue to outperform AI. To understand this better, we turned to an AI expert.

Bias in AI or a Reflection of Our Society?

Bias in AI is an entire field of study for researchers.

Debarka Sengupta, Associate Professor, Centre for Artificial Intelligence, IIIT Delhi, highlighted that the biases are a reflection of our society. “Machine learning algorithms are no smarter, as of now, but they are being made smarter because people are pointing out these things more and more,” he says, underlining that, so far, AI has been trained on information readily available on the internet, uploaded by humans.

He adds that if you go deeper into how machine learning algorithms function, you will realise that they are simply trying to minimise error, without caring about caste, class, creed, or other factors. “Because your representative samples (which the AI is trained on) have a high concentration of a certain class of people, it basically learns the biases of that data,” he says.
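To see what Sengupta means, here is a minimal toy sketch in Python. It is our own illustration, not how Bing, Hotpot, or Picsart actually work: a “model” that simply picks whichever single answer makes the fewest mistakes on its training data. The 90-to-10 split of images below is invented purely for illustration.

```python
# Hypothetical toy training data: the gender shown in 100 images captioned
# "successful person". The 90/10 split is made up for this illustration.
training_labels = ["man"] * 90 + ["woman"] * 10


def error_rate(prediction, labels):
    """Fraction of training examples a constant prediction gets wrong."""
    return sum(label != prediction for label in labels) / len(labels)


def fit_constant_model(labels):
    """Pick the single output with the lowest training error.

    The objective only counts mistakes; nothing in it knows about
    gender, caste, or class, so it simply mirrors the data's skew.
    """
    candidates = set(labels)
    return min(candidates, key=lambda c: error_rate(c, labels))


model_output = fit_constant_model(training_labels)
print(model_output)                          # man
print(error_rate("man", training_labels))    # 0.1
print(error_rate("woman", training_labels))  # 0.9
```

Nothing in the code is “sexist”; it just minimises error. Because men dominate the sample, answering “man” every time is the lowest-error choice, which is exactly the kind of learned bias Sengupta describes.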

When asked whether these biases can be eliminated, he says it is possible if the biases can be defined. “For example, men versus women can be sorted. But otherwise, you know, it's very difficult,” he says. In technical terms, the inputs used to counter such biases are called ‘covariates’: independent variables that can influence the outcome of a given statistical trial but are not of direct interest. “It is a challenging task. It's an add-on amount of work, an add-on computation that has to be done, to sort of circumvent [the biases].”

However, he is optimistic that work is being done to eliminate biases. “Social scientists are collaborating with AI researchers to help them understand what sort of biases should be taken care of,” he says.

“I can see more and more women coming in as heads of technical teams. So it's also the representation that’ll help drive away biases. The sources of bias within the technical team who are making and training AI needs diversity. If you have more people coming from different geographies, races, and sects, then eventually that will also have its effect in mitigating biases.”

Debarka also points out that algorithmic biases can affect people’s lives on a much larger scale. In the US, for instance, an investigation was launched into Apple’s credit card after complaints that it offered men and women different credit limits.

Going back to the AI tools, we asked Bing to show a “progressive society”, and it generated a set of stick figures of sorts. Given the neon colours, light leaks, and boxes, it looked like a society of robots in a futuristic era. AI seemed to be saying that society would be better when it is filled with more of the same kind: a homogeneous group. But would it really?